A rant on Generative AI & me

I am very much against genAI, especially when it comes to things that could be commissioned from humans. AI sucks, yet I regularly use it, and services that use it, whether I want to or not.

Why does it suck? If it sucks, why use it? Not sure. It is hard to reconcile my thoughts on this, but let's try, I guess.

I will not pretend to be an expert on things. This is purely an opinion piece based on my impression of it all and where I think it may be going.

Warning, rant ahead.

It's everywhere

Here I am using Windows 11 (though I'm looking to switch, for not entirely unrelated reasons), which has been getting more AI integrations throughout it over the last few major versions. I have IntelliSense/GitHub Copilot autocompletion turned on in Visual Studio, and I regularly use platforms like Cloudflare, Google, GitHub, Twitch/Amazon, Bluesky, Takeaway.com, Steam, Discord, both of my local grocery stores, and I'm sure more that I'm not even actively aware of, all of which are normalizing the use and integration of AI in their products, usually via 3rd-party AI models. You can't get away from it, and it is getting more overbearing by the day.

Why I think AI is bad

First off, I think it is good to lay out why I think AI is bad. There are a lot of reasons why AI sucks. I won't pretend to know all of them or to be an expert on any of them, but they are worth mentioning to establish a baseline for how I feel about it.

Ethicality

The data these AI models are trained on, and the methods used to gather that data, are inherently unethical, and the companies behind them aren't much better. AI models are hungry for training data, and these companies will do whatever they can to get as much of it as possible. Copyright, licenses and the other things humanity developed over the years to stop people from taking each other's work are often disregarded.

As an example: as someone who works on and owns a few websites, I've found that having a website that is even just mildly known in niche circles means you get crazy amounts of traffic from bots, these days largely used for gathering AI training data. Sometimes malicious AI crawlers (including ones from the big companies) come by to yoink all the data they can, which can effectively amount to getting DDoS'd, leading to downtime, performance issues or large bills. The easiest solution? Cloudflare, which itself also platforms, supports and integrates generative AI.

There is no escaping that in that regard. Fun, right?

Environmental

Another thing these models are hungry for is energy and, by extension, cooling. Many countries are already struggling with their power grids. Here in the Netherlands, for example, our power grid is 'full', so new homes can't be built in the middle of a housing crisis. Yet despite a ban on new giant datacenters, a giant Microsoft datacenter is now getting built anyway, just because Microsoft put in plans for it back in 2016, and it will slurp up as much power as a city with 90,000 homes. Microsoft's core mission in 2026? A heightened focus on AI.

Misinformation

AI still gets things wrong, and depending on what 'truths' it is trained on, that becomes the new truth.

I'm sure the various companies are trying to correct information where they can, but those corrections are then shaped by their own morals and opinions. Musk, for example, has repeatedly tried to make Grok less left-leaning, but because facts are often 'leftist' in the current political climate, he keeps failing. In a way that's a good thing, but it also shows how little control they have over these models, and how hard it is to take certain facts, or even 'opinionated' information, out.

If they can't even make them fully biased on certain political topics, can they structurally remove actual misinformation at all? Not a clue. Again, I'm not an expert, but this example makes me lean towards no.

Education

I sometimes see AI being equated with the introduction of the calculator. "Well, calculators made math much easier! AI is just like that, but for other things too!" But you still learn how to do math manually, even with a calculator. And even so, the math skills in my own head have gotten much worse over the years, as I've just used Google to do math for me. I can probably manage if you give me some equations and a pen and paper for a while, but not at the level I could back when I was in school.

Now imagine that loss of skill, but for problem solving, making educated decisions, or simply having knowledge. We'd already be completely and utterly fucked if a big solar flare knocked out the internet/power for a while, but imagine how much worse it would be a generation from now.

Realistically, neither the human brain, society, nor the education system was built with AI in mind. Back when I was a kid, I already (incorrectly) thought school was useless. Now, a decade later, why would a kid go to school to learn something if they can just ask AI? If things keep going the way they are, I'm worried big education reforms will be needed just to keep kids going to school at all. Terrifying.

Creativity

While AI is greatly affecting the industry I'm in (IT), as well as many other industries, artists are far more affected by it, at least in my circles. I have friends who are getting fewer commissions, or none at all anymore, due to the influx of AI art. Some have even had someone prompt a model to slightly alter their art and remove the watermarks, and then resell it. That sucks. Contrary to what AI bros think, in my opinion writing prompts does not even remotely require the level of creativity that went into making the art these models rip off to make that prompted image a reality.

Employment

I don't think this one needs a lot of explaining. Jobs are being replaced by AI. Sure, AI creates some jobs, but the number of jobs it displaces, often entry-level positions, is far greater, and as AI gets better, the level of work it can do goes up as well, making it much harder for people to get a job at these levels. I don't think even the AI bros disagree with that.

The really ugly stuff

There's been a lot of this in the news recently and I don't want to go too far into it, but in particular, AIs like Grok are being used to make particularly bad images, things everyone sane can agree are bad.

Where AI can do good

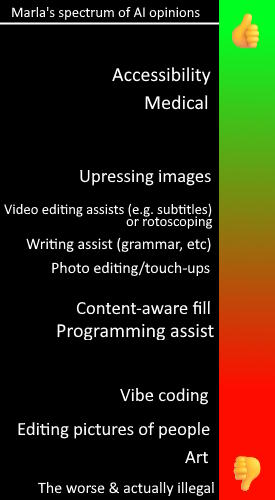

I think I can place all usage of AI on a spectrum going from "good" to "bad". Obviously, based on the above, most of it falls in the right half of the spectrum, the bad section. To be fair, though, there are things where I can imagine it doing good.

Accessibility

AI can greatly assist people with various disabilities. It can describe things, increasingly well, for people who are blind, especially on otherwise inaccessible websites. It can translate on the fly to cross language barriers. It can give people with impaired motor skills a voice back.

Medical

Yes, this one is scary, but since this is an industry I used to work in, I looked into it deeply a few years ago, and even back then there were promising studies showing that AI can be great at catching things doctors might have missed at first glance (i.e. a second opinion), as long as any result is then rechecked by a doctor rather than used instead of one (in which case it would, again, replace a job).

More?

The above two things came from me, but that was all I could think of. To play devil's advocate at least a little, I tried to find some additional things where AI can do good. One that I can definitely imagine working well: AI is obviously amazing at going through large sets of data to find unusual outliers, patterns or other things that would take humans a long time to find, which can be great in, for example, disaster scenarios and emergency relief efforts.

I'm sure there are more things it can actually help with that wouldn't displace jobs, and I'm happy to learn about them to offset the negativity around it in my head, but so far it's been hard to find many more. There are a few things I'm on the fence about, like Photoshop's content-aware fill, grammar-focused writing assistance, etc., but alas.

Reconciling

Around me

With all that out of the way: I have friends and people around me on various Discord servers who are constantly using AI for many things. Using it for programming-related assistance won't make us any less good friends, because I use Copilot too, albeit for free. I'll even be glad if it finds an annoying bug for you. But I will dislike you for vibe-coding (if you don't know what that means, keep reading), and even more so, I will actively call you out for generating art with it. I think the latter, in all cases, is a no-go for me.

Am I a hypocrite?

But I also feel it may be hypocritical to feel that way about art more than about any other use of AI. Is programming not also a form of art? The models and methods may be entirely different, but they are generally developed by the same companies and made available through the same frontends (e.g. ChatGPT embeds Sora, Gemini embeds Nano Banana, Grok embeds... whatever they call that horrorshow), and those companies will, by definition, funnel any money they make from uses I might find ethical into stealing more art from artists. While Anthropic (who make Claude AI), for example, are guilty of stealing a whole lot of books (and got sued for it and lost), they haven't stooped as low as image generation built on the work of tons of artists yet, so I guess they're the least of all evils so far, but even that is debatable and likely temporary. And even then, LLMs are LLMs, and generative AI is generative AI.

The spectrum

On the imaginary spectrum I mentioned earlier, I see AI art generation at the far right (excuse the possible coincidences there), vibe-coding less far to the right but still on the right side, etc.

In an attempt to illustrate my feelings, here it is from top-to-bottom for readability (I initially made it left-to-right but figured it would be unreadable):

I'm sure there are things missing, probably even from within this blog post, but it is 7 AM and I want bed.

What is vibe-coding?

This one might take some additional explaining. I think there is nuance in how AI can be used in programming, and it largely comes down to "assistance" vs. "vibe-coding".

To me, the difference between programming assistance and vibe-coding is a big one. When I use it the way I do, I stay in control of the code, I keep understanding what it does, and I don't let it generate anything beyond small, simple parts that wouldn't step on anyone's toes, licensing-wise, given what the AI models were trained on.

Vibe-coding, on the other hand, is to me telling e.g. Claude what you want made, and then letting it generate it from scratch, from A to B, until there's a finished product, without a clue what Claude actually wrote or any understanding of possible performance issues, security issues or other bugs. I don't like that.

My line

I would personally draw my line on the spectrum at "Programming assist", but in as tame a way as possible, where I only have Claude explain things to me when I'm actually hard-stuck, or have it crunch through data that would otherwise take much longer to process. I tend not to let AI write anything beyond basic inline Copilot/IntelliSense autocomplete-level code that I'd also write in a similar fashion myself.

I recognize that while I might pick up on some stuff, I won't learn as much as I would by toiling at a problem myself, but I do have goals to meet and other priorities in life right now outside of my programming projects. I think that is how I reconcile myself with my own, arguably already unethical, use of AI for programming assistance.

In closing

There is also an increasingly nagging feeling of not wanting to fall behind my peers, whose line often appears to be at "Art" on the spectrum. I do try to keep up with what is being developed and generated in AI land beyond where I drew my line, and I have even tried to see what Claude would do in agent mode in certain scenarios, but that is where my morals (and frankly also a fear of breaking a license or copyright) kick in, and I just end up abandoning or deleting whatever it made. It feels bad, and at that point it's not something I'd really want my name on anymore.

I hope the opinions I've landed on in this post turn out to be the right call, and that this doesn't backfire into me constantly being outpaced by Claude (or whatever AI is best in the near future) to the point where holding onto or getting a job as a programmer at my level becomes untenable.

I also worry about generative AI outside of my own field, especially in terms of art and frankly society in general, but time will tell how that ends up going. Good luck, everyone, we'll need it.